Monthly Socials!

The organizers and volunteers of Boston VR are continuing our monthly socials at TimeOut Market.

The next social event is on January 6th at 7 pm.

Click here to RSVP!

Crafter’s Corner – Crafting what you see in VR, Part 3

Hello again from Crafter’s Corner, where we discuss how to create experiences that people can enjoy in virtual and mixed reality. This is the final installment of our three-part series on Blender, the popular free 3D design tool used to create the graphical assets that game engines import to build the visual side of a VR experience. In Part 1, we discussed how to use Blender to build the shapes, or meshes, that make up the models shown in VR. In Part 2, we reviewed materials and UVs, explaining how Blender takes colors and flat textures and applies them to 3D meshes. To complete our review of Blender, we now turn to the fundamentals of computer animation and how animation tools use parts of your new 3D models to make them move.

The simplest form of animation requires no additional work in Blender. All three major game engines (Unity, Unreal, and Godot) can take models exported from Blender with just meshes and materials and build animations for them. These engine animation tools, along with Blender’s own animation tool, all build on the same fundamental attributes, so we will discuss those first.

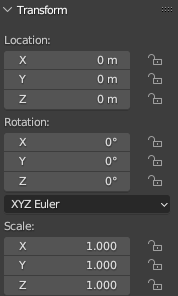

Location, rotation, and scale in Blender

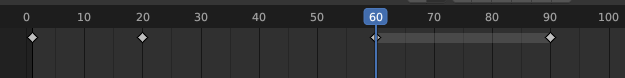

When a game engine places a 3D model in a VR scene, it stores three attributes with the model: its location in the scene, its rotation in 3D space, and its scale. Animations change those attributes over time to make objects move. An animator does this by building a timeline in the animation tool, with the initial location, rotation, and scale saved in the first frame. To move, rotate, grow, or shrink the model, the animator adds more “keyframes” to the timeline, each recording how the model should look at that point in the animation. The animator can also adjust how the model transitions from one keyframe to the next (a setting usually called interpolation or easing), so that most of the change happens early, late, or evenly across the time between the two keyframes. Once the final keyframe has been added, the animation is complete, and the experience designer can use the context of the experience to tell the game engine when to run the animation and how quickly it should play.

A simple animation with four keyframes in Blender
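To make keyframes and easing more concrete, here is a minimal Python sketch. It is an illustration only, not Blender’s or any game engine’s actual API. It assumes a hypothetical `Keyframe` record that holds location, rotation (as Euler angles in degrees), and scale. A hypothetical `sample` function finds the two keyframes on either side of a given frame and blends between them. The `ease_in` and `ease_out` curves are simple illustrative choices that push most of the change late or early, respectively.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Keyframe:
    frame: int
    location: Vec3
    rotation: Vec3  # Euler angles in degrees (a simplification for this sketch)
    scale: Vec3


# Easing curves map normalized time t in [0, 1] to animation progress in [0, 1].
def linear(t: float) -> float:
    return t  # change spread evenly between the two keyframes


def ease_in(t: float) -> float:
    return t * t  # slow start: most of the change happens late


def ease_out(t: float) -> float:
    return 1.0 - (1.0 - t) ** 2  # fast start: most of the change happens early


def lerp3(a: Vec3, b: Vec3, w: float) -> Vec3:
    """Blend two 3-vectors: w = 0 gives a, w = 1 gives b."""
    return tuple(x + (y - x) * w for x, y in zip(a, b))


def sample(keys: List[Keyframe], frame: float,
           easing: Callable[[float], float] = linear) -> Tuple[Vec3, Vec3, Vec3]:
    """Return (location, rotation, scale) at `frame`.

    `keys` must be sorted by frame, with no two keyframes on the same frame.
    """
    if frame <= keys[0].frame:  # before the first keyframe: hold the first pose
        k = keys[0]
        return k.location, k.rotation, k.scale
    if frame >= keys[-1].frame:  # after the last keyframe: hold the last pose
        k = keys[-1]
        return k.location, k.rotation, k.scale
    for prev, nxt in zip(keys, keys[1:]):
        if prev.frame <= frame <= nxt.frame:
            t = (frame - prev.frame) / (nxt.frame - prev.frame)
            w = easing(t)
            return (lerp3(prev.location, nxt.location, w),
                    lerp3(prev.rotation, nxt.rotation, w),
                    lerp3(prev.scale, nxt.scale, w))
    raise ValueError("keyframes are not sorted by frame")


keys = [
    Keyframe(0, (0.0, 0.0, 0.0), (0.0, 0.0, 0.0), (1.0, 1.0, 1.0)),
    Keyframe(10, (10.0, 0.0, 0.0), (0.0, 0.0, 90.0), (2.0, 2.0, 2.0)),
    Keyframe(20, (10.0, 5.0, 0.0), (0.0, 0.0, 180.0), (1.0, 1.0, 1.0)),
]
print(sample(keys, 5))               # ((5.0, 0.0, 0.0), (0.0, 0.0, 45.0), (1.5, 1.5, 1.5))
print(sample(keys, 5, ease_in)[0])   # (2.5, 0.0, 0.0): only a quarter of the way there
print(sample(keys, 5, ease_out)[0])  # (7.5, 0.0, 0.0): three quarters of the way there
```

Real tools offer richer curves. Blender, for example, lets you edit them as Bézier curves in its Graph Editor. Real tools also usually blend rotations with quaternions rather than raw Euler angles to avoid artifacts. This sketch sticks with simple linear blending so it stays easy to read.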

This may be sufficient for simple 3D models, but as models grow more complex, game engines need more information about how an animation changes a model’s mesh. Consider, for example, an animation of a dog walking across a scene. Location, rotation, and scale alone cannot convey a walk. All you can do with them is slide or spin the whole dog, which is unlikely to be what your viewers expect from your experience. To make a dog walk convincingly, the animator needs a skeleton whose bones can each be moved and rotated individually across many keyframes.

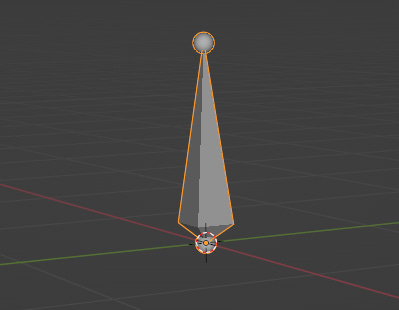

The first bone in a new armature in Blender

This is done in Blender by adding an “armature” to a model. A newly created armature starts with a single “bone”. The animator then extends the bones and connects them to each other, using controls similar to those used to shape the model in the modeling tools, until a complete “skeleton” has been built in the armature. Next, the animator positions each bone inside the model’s mesh to match the model’s default, unanimated pose. For humanoid models and skeletons, this is typically a “T-pose” (arms stretched straight out to the sides) or an “A-pose” (arms held straight but angled down and away from the body). A skeleton can have as many bones as the animation quality of the experience requires. As a rule, each part of the model that needs to bend or turn independently needs its own bone for the animation to control.
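A skeleton is essentially a tree of bones, where each bone has a parent. The Python sketch below models that idea with hypothetical `Bone` and `Armature` classes. These are not Blender’s `bpy` API. The sketch assumes every child bone is connected, so its head sits at its parent’s tail, and it assumes root bones start at a single armature origin. It computes rest-pose positions and lists the chain of bones from the root down to a given bone, which is the order in which motion is inherited.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Bone:
    name: str
    parent: Optional[str]  # None for a root bone
    offset: Vec3           # tail position relative to this bone's head, in the rest pose


class Armature:
    """Hypothetical armature in which every child bone is connected to its parent."""

    def __init__(self, origin: Vec3 = (0.0, 0.0, 0.0)):
        self.origin = origin  # where root bones start (a simplifying assumption)
        self.bones: Dict[str, Bone] = {}

    def add_bone(self, name: str, parent: Optional[str], offset: Vec3) -> None:
        # Like extending a new bone from an existing one: the parent must already exist.
        if parent is not None and parent not in self.bones:
            raise KeyError(f"unknown parent bone: {parent}")
        self.bones[name] = Bone(name, parent, offset)

    def head(self, name: str) -> Vec3:
        parent = self.bones[name].parent
        return self.origin if parent is None else self.tail(parent)

    def tail(self, name: str) -> Vec3:
        hx, hy, hz = self.head(name)
        ox, oy, oz = self.bones[name].offset
        return (hx + ox, hy + oy, hz + oz)

    def chain(self, name: str) -> List[str]:
        """Bones from the root down to `name`."""
        path: List[str] = []
        current: Optional[str] = name
        while current is not None:
            path.append(current)
            current = self.bones[current].parent
        return path[::-1]


# A tiny rest pose: a spine plus a left arm held straight out to the side (T-pose style).
rig = Armature(origin=(0.0, 0.0, 1.0))  # hips 1 unit above the floor, z pointing up
rig.add_bone("spine", None, (0.0, 0.0, 0.5))
rig.add_bone("upper_arm.L", "spine", (0.25, 0.0, 0.0))
rig.add_bone("forearm.L", "upper_arm.L", (0.25, 0.0, 0.0))
rig.add_bone("hand.L", "forearm.L", (0.125, 0.0, 0.0))

print(rig.chain("hand.L"))  # ['spine', 'upper_arm.L', 'forearm.L', 'hand.L']
print(rig.tail("hand.L"))   # (0.625, 0.0, 1.5)
```

Each new bone hangs off an existing one, so rotating `upper_arm.L` in a pose would carry `forearm.L` and `hand.L` along with it. That parent-child structure is what the animation tools rely on.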

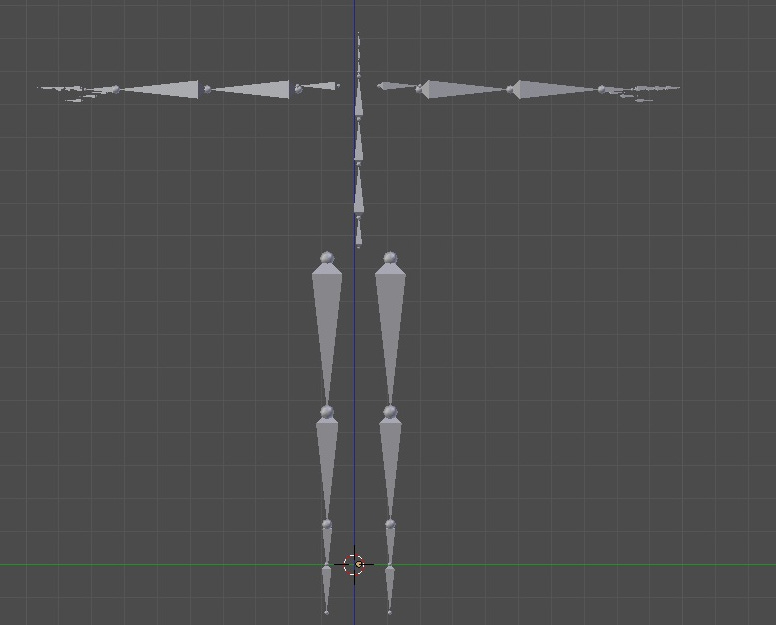

Once the skeleton is in place, it can be bound to the model. This is done by associating every vertex of the model with one or more bones in the skeleton. Each association has a weight that describes how strongly that bone moves the vertex. A single bone may be enough for rigid parts of the model, but more complex areas, such as skin bending around a joint, need several bones blended together. This can take a lot of work for models with many vertices or bones. When models follow standard conventions, however, many tools can speed up the process considerably. For example, Adobe’s Mixamo website shows how one standard humanoid skeleton can be applied to many different humanoid 3D models and drive many kinds of animations while still looking natural.

A Mixamo skeleton in a T-pose in Blender
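Weighting vertices to bones is commonly implemented with linear blend skinning. In this approach, each influencing bone moves the vertex as if the vertex were rigidly attached to it, and the results are averaged using the vertex’s weights. The 2D Python sketch below uses a hypothetical pose format in which each bone simply rotates about its own pivot point. A real rig works in 3D, composes each bone’s transform with its parent’s, and uses matrices or quaternions.

```python
import math
from typing import Dict, Tuple

Vec2 = Tuple[float, float]
# Hypothetical pose format: bone name -> (pivot point, rotation in degrees about that pivot)
Pose = Dict[str, Tuple[Vec2, float]]


def rotate_about(p: Vec2, pivot: Vec2, angle_deg: float) -> Vec2:
    """Rotate point p counterclockwise around pivot by angle_deg degrees."""
    a = math.radians(angle_deg)
    dx, dy = p[0] - pivot[0], p[1] - pivot[1]
    c, s = math.cos(a), math.sin(a)
    return (pivot[0] + c * dx - s * dy, pivot[1] + s * dx + c * dy)


def skin_vertex(rest: Vec2, weights: Dict[str, float], pose: Pose) -> Vec2:
    """Linear blend skinning: move the vertex with each bone, then average by weight."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("vertex must be weighted to at least one bone")
    x = y = 0.0
    for bone, w in weights.items():
        pivot, angle = pose[bone]
        px, py = rotate_about(rest, pivot, angle)
        x += (w / total) * px  # weights are normalized so they sum to 1
        y += (w / total) * py
    return (x, y)


# An arm lying along the x-axis with the elbow at x = 1; the forearm bends 90 degrees.
pose: Pose = {
    "upper_arm": ((0.0, 0.0), 0.0),  # upper arm stays put
    "forearm": ((1.0, 0.0), 90.0),   # forearm rotates about the elbow
}
rigid_vertex = skin_vertex((2.0, 0.0), {"forearm": 1.0}, pose)
elbow_vertex = skin_vertex((1.5, 0.0), {"upper_arm": 0.5, "forearm": 0.5}, pose)
print(rigid_vertex)  # approximately (1.0, 1.0): follows the forearm completely
print(elbow_vertex)  # approximately (1.25, 0.25): halfway between the two bones
```

A vertex near the elbow that is split evenly between the two bones ends up between them. This is what lets skin bend smoothly instead of creasing. Linear blending can also make joints lose volume at sharp bends, which is one reason some tools offer alternatives such as dual quaternion skinning.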

After the armature has been completed and fully matched to the vertices of the 3D model, the animator has a choice. Because Blender and the game engines all support building and running animations, the animator can either build the model’s animations in Blender or wait and build them in the game engine. Animations built in Blender are simply exported along with the model and imported into the game engine, where they run in the scene.

When building animations for armatures, there are two approaches to moving a chain of related bones into position for each keyframe: forward kinematics and inverse kinematics. With forward kinematics, the animator rotates each bone directly, working outward from the root of the chain, and each child bone follows its parent. With inverse kinematics, the animator places the end of the bone chain (a hand or a foot, for example) where it should go, and the software calculates how each bone in the chain must bend to reach that point. An armature can be designed to support both forward and inverse kinematics in its animations.
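The difference between the two approaches shows up clearly on a simple two-bone arm. In this 2D Python sketch, the hypothetical `forward_kinematics` function turns joint angles into joint positions, with each angle measured relative to its parent bone. The hypothetical `two_bone_ik` function works backward from a target point to those angles using the law of cosines. This analytic two-bone solver is a common textbook technique, chosen here because it is short. It is not necessarily how Blender or any game engine solves IK.

```python
import math
from typing import List, Tuple

Vec2 = Tuple[float, float]


def forward_kinematics(lengths: List[float], angles_deg: List[float],
                       root: Vec2 = (0.0, 0.0)) -> List[Vec2]:
    """Return the root, each joint, and the chain tip, given angles relative to each parent."""
    points = [root]
    x, y = root
    heading = 0.0
    for length, angle in zip(lengths, angles_deg):
        heading += math.radians(angle)  # a child's rotation adds on top of its parent's
        x += length * math.cos(heading)
        y += length * math.sin(heading)
        points.append((x, y))
    return points


def two_bone_ik(l1: float, l2: float, target: Vec2) -> Tuple[float, float]:
    """Return (shoulder, elbow) angles in degrees that put the chain tip at target.

    The chain is rooted at the origin. Out-of-reach targets are clamped to the
    nearest reachable distance along the same direction.
    """
    tx, ty = target
    d = math.hypot(tx, ty)
    d = max(abs(l1 - l2), min(l1 + l2, d))  # clamp to the reachable range
    # Law of cosines gives the elbow bend needed to span distance d.
    cos_elbow = (d * d - l1 * l1 - l2 * l2) / (2 * l1 * l2)
    elbow = math.acos(max(-1.0, min(1.0, cos_elbow)))
    # Aim at the target, then correct for the angle introduced by the bent elbow.
    shoulder = math.atan2(ty, tx) - math.atan2(l2 * math.sin(elbow), l1 + l2 * math.cos(elbow))
    return math.degrees(shoulder), math.degrees(elbow)


# Forward kinematics: the animator sets the angles, and the tip position follows.
print(forward_kinematics([1.0, 1.0], [0.0, 90.0])[-1])  # approximately (1.0, 1.0)

# Inverse kinematics: the animator sets the tip target, and the angles are solved.
shoulder, elbow = two_bone_ik(1.0, 0.8, (1.2, 0.7))
tip = forward_kinematics([1.0, 0.8], [shoulder, elbow])[-1]
print(tip)  # approximately (1.2, 0.7): the solved angles land the tip on the target
```

The solver returns only one of the two possible elbow bends. For targets that are out of reach, it stretches the chain straight toward them. Longer chains generally need iterative solvers, such as cyclic coordinate descent (CCD) or FABRIK.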

And with that, we have completed our tour of Blender’s basic functionality for building what users will see in your VR scenes. With study and practice, and without spending any money, anyone can craft 3D models, create textures and apply them to those models, and build armatures that let the models be animated to do whatever a scene requires. I hope you have enjoyed this tour of Blender and how it can be used to craft VR experiences. As always, if you have questions, feedback, or ideas for topics you would like to see, please reach out via one of the methods below.

Justin is a long-time software engineer who specializes in cloud and VR development. He can be reached at https://archmag.us, justin@archmag.us or on Bluesky at https://bsky.app/profile/archmag.us

Video of the Month

from the YouTube Channel: ThrillSeeker

Have you read the sequel?

From Amazon.com:

#1 NEW YORK TIMES BESTSELLER • The thrilling sequel to the beloved worldwide bestseller Ready Player One, the near-future adventure that inspired the blockbuster Steven Spielberg film.

NAMED ONE OF THE BEST BOOKS OF THE YEAR BY THE WASHINGTON POST • “The game is on again. . . . A great mix of exciting fantasy and threatening fact.”—The Wall Street Journal

AN UNEXPECTED QUEST. TWO WORLDS AT STAKE. ARE YOU READY?

Days after winning OASIS founder James Halliday’s contest, Wade Watts makes a discovery that changes everything.

Hidden within Halliday’s vaults, waiting for his heir to find, lies a technological advancement that will once again change the world and make the OASIS a thousand times more wondrous—and addictive—than even Wade dreamed possible.

With it comes a new riddle, and a new quest—a last Easter egg from Halliday, hinting at a mysterious prize.

And an unexpected, impossibly powerful, and dangerous new rival awaits, one who’ll kill millions to get what he wants.

Wade’s life and the future of the OASIS are again at stake, but this time the fate of humanity also hangs in the balance.

Lovingly nostalgic and wildly original as only Ernest Cline could conceive it, Ready Player Two takes us on another imaginative, fun, action-packed adventure through his beloved virtual universe, and jolts us thrillingly into the future once again.

Buy it here

QQs = Quality Questions (worth pondering)

What if Boston’s first mixed reality cafe just opened its doors downtown? What would it look like? Augmented lattes? Holographic art?

What are three unique ways you can use XR to reflect deeply on your past year and feel fully prepared for an epic new year of advances in extended reality technology?

What impact would it have on our everyday conversations if, while wearing augmented reality glasses, we could see our speech expressed as visuals?

-Chris

For more QQs to enrich and enhance your creative thinking, follow me on X @letsaskqqs

Stay tuned for our next newsletter for more updates, highlights, and community stories. Let's keep exploring the infinite possibilities of extended reality together!

Boston VR is committed to fostering a welcoming and innovative community for XR enthusiasts and professionals.

Whether you're a developer, creator, researcher, or just plain curious, we're excited to explore the future of XR with you.

For more detailed discussions and to share your thoughts or experiences, don't hesitate to join us at our next meetup or reach out through our community channels.

See you in the virtual world!

Join the Boston VR Slack

To join, click here

Would you like to spotlight your VR project to inspire creativity in our community? Email Chris at chris@bostonvr.org with a short description of your project and why it should be included in the newsletter.

Interested in Volunteering with Boston VR?

Email Casey. We need help with marketing, event planning, dev jams, sponsorship, and more!

Thank you to our Premium Sponsor, AMXRA!

AMXRA is the premier medical society advancing the science and practice of medical extended reality, https://amxra.org/.

AMXRA has generously committed to being a premium sponsor for Boston VR over the coming years. We are immensely grateful for their support and partnership in exploring the forefront of XR technology.